As a seasoned Robotics Engineer with a passion for innovation, I bring a wealth of industry and research experience to the field. My expertise spans humanoid robotics, machine learning, image processing, SLAM and navigation, and advanced control systems, honed through my Master of Science in Robotics from Middlesex University.

At Expert Hub Robotics, I served as a Senior Robotics Engineer and Project Lead, configuring SLAM and navigation systems on humanoid robots, designing custom attachments, developing AI-based chatbot applications, and delivering specialized software implementations. My experience also extends to the VLSI industry, where I worked as a Corporate Application Engineer on complex customer feature validation, product development, and hierarchical clock domain crossing (CDC) flow validation.

My commitment to excellence has been recognized through several awards, including the Best Undergraduate Project Award from the IESL and the Best Technical Paper Award from the IET. With a desire to make a meaningful impact, I am eager to bring my industry expertise to the research field and contribute to the ongoing advancements in robotics.

Expertise

Computer Science

- Graph Construction

- Environment Mapping

- 3D Scenes

- Deep Reinforcement Learning

- Range Finder

Earth and Planetary Sciences

- Autonomy

- Cartography

- Position (Location)

Organisations

- Faculty of Geo-Information Science and Earth Observation (ITC)

- Scientific Departments (ITC-SCI)

- ITC-TECH (ITC-SCI-TECH)

Publications

2025

2023

Research profiles

Master of Science in Robotics,

- Middlesex University

Bachelor of Electrical and Electronics Engineering,

- University of Peradeniya

Finished projects

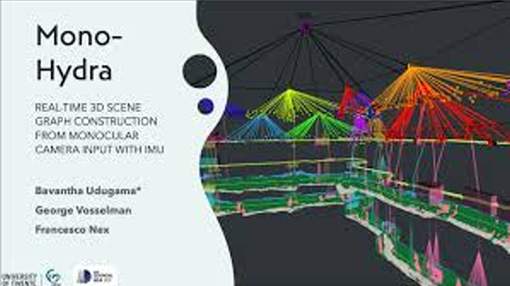

Mono-Hydra

Mono-Hydra: Real-Time 3D Scene Graph Construction from Monocular Camera Input with IMU

The ability of robots to navigate 3D environments autonomously depends on their understanding of spatial concepts, ranging from low-level geometry to high-level semantics such as objects, places, and buildings. 3D scene graphs have emerged as a robust tool for building this understanding, representing the environment as a layered graph of concepts and their relationships. However, constructing these representations in real time from monocular vision remains a difficult task that has not been explored in depth. This paper presents Mono-Hydra, a real-time spatial perception system that combines a monocular camera and an IMU sensor, focusing on indoor scenarios; the approach can also be adapted to outdoor applications. The system uses a suite of deep learning algorithms to estimate depth and semantics, and a robocentric visual-inertial odometry (VIO) algorithm based on square-root information to obtain consistent odometry from the IMU and the monocular camera. It achieves an error below 20 cm while running in real time at 15 fps on a laptop GPU (NVIDIA 3080), enabling real-time 3D scene graph construction. This improves the efficiency and effectiveness of decision-making with simple camera setups, increasing the agility of robotic systems. We make Mono-Hydra publicly available at: https://github.com/UAV-Centre-ITC/Mono_Hydra.
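To make the idea of "a layered graph of concepts and their relationships" concrete, the sketch below shows one possible in-memory representation of a layered 3D scene graph. It is a minimal illustration under assumptions, not Mono-Hydra's data structure: the layer names in `LAYERS` and the `Node`, `SceneGraph`, `connect` and `children` names are hypothetical. Edges within a layer (for example, between adjacent places) are stored separately from edges that link a node to its parent in a higher layer (for example, an object to the room that contains it).

```python
from dataclasses import dataclass, field

# Hypothetical layer hierarchy, ordered from lowest to highest abstraction and
# loosely following the "objects, places, and buildings" levels in the abstract.
LAYERS = ("mesh", "objects", "places", "rooms", "buildings")


@dataclass
class Node:
    node_id: str
    layer: str
    position: tuple[float, float, float]  # node position in metres (assumed frame)
    label: str = ""  # semantic label, e.g. from a segmentation network


@dataclass
class SceneGraph:
    nodes: dict[str, Node] = field(default_factory=dict)
    # Undirected edges between nodes of the same layer, stored as sorted pairs.
    intra_edges: set[tuple[str, str]] = field(default_factory=set)
    # Directed (parent, child) edges from a higher layer to a lower one.
    inter_edges: set[tuple[str, str]] = field(default_factory=set)

    def add_node(self, node: Node) -> None:
        if node.layer not in LAYERS:
            raise ValueError(f"unknown layer: {node.layer}")
        self.nodes[node.node_id] = node

    def connect(self, a: str, b: str) -> None:
        """Link two existing nodes; inter-layer edges are stored parent -> child."""
        la = LAYERS.index(self.nodes[a].layer)
        lb = LAYERS.index(self.nodes[b].layer)
        if la == lb:
            self.intra_edges.add(tuple(sorted((a, b))))
        elif la > lb:
            self.inter_edges.add((a, b))
        else:
            self.inter_edges.add((b, a))

    def children(self, parent: str) -> list[str]:
        """Return the sorted ids of nodes one or more layers below `parent`."""
        return sorted(child for p, child in self.inter_edges if p == parent)


g = SceneGraph()
g.add_node(Node("b0", "buildings", (0.0, 0.0, 0.0)))
g.add_node(Node("r1", "rooms", (2.0, 1.0, 0.0), "kitchen"))
g.add_node(Node("o7", "objects", (2.5, 1.2, 0.9), "chair"))
g.connect("b0", "r1")
g.connect("o7", "r1")  # argument order does not matter for inter-layer edges
print(g.children("r1"))
```

Storing inter-layer edges with a fixed parent-to-child direction keeps hierarchical queries (such as "which objects are in this room?") to a single filter over the edge set; a real-time system would likely use indexed adjacency lists instead.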

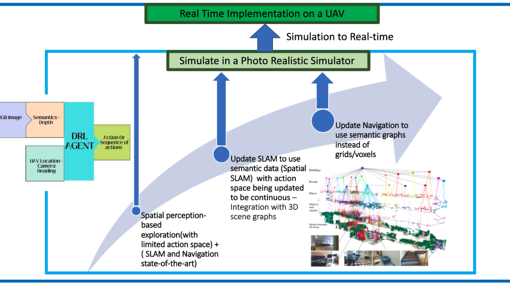

Vision-based mobile robot for reconnaissance

The objective was to create a 3D map of an unknown environment. The problem was tackled on three main fronts: a 3D vision system, an intelligent navigation system (INS), and a holonomic robot platform with a localization algorithm. At a basic level, the vision system was built by configuring two web cameras as a stereo camera pair. Laser ranging was used as the sensing method, and a 2D map of the environment was obtained under the guidance of the intelligent navigation system. A particle filter-based SLAM algorithm was implemented for localization, and a Kinect sensor was interfaced with the system to extend the map to 3D.
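The core predict, weight and resample cycle behind particle filter-based localization can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's implementation: it localizes a robot in one dimension against a single wall at a known position (`WALL_X`) using a range reading, so it is plain Monte Carlo localization rather than full particle-filter SLAM, where each particle would also carry its own map estimate. The constants and the `pf_step` function are hypothetical.

```python
import math
import random

# Hypothetical 1D setup: the robot drives along a corridor and measures its
# distance to a wall at a known position with a range finder.
WALL_X = 10.0        # assumed wall position (m)
MOTION_NOISE = 0.1   # assumed std. dev. of odometry noise (m)
RANGE_NOISE = 0.2    # assumed std. dev. of range-finder noise (m)


def gaussian(x: float, mu: float, sigma: float) -> float:
    """Normal probability density of x for mean mu and std. dev. sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def pf_step(particles: list[float], control: float, measured_range: float,
            rng: random.Random) -> list[float]:
    # 1. Predict: move every particle by the odometry reading plus sampled noise.
    moved = [p + control + rng.gauss(0.0, MOTION_NOISE) for p in particles]
    # 2. Weight: likelihood of the measured range given each particle's position.
    weights = [gaussian(measured_range, WALL_X - p, RANGE_NOISE) for p in moved]
    if sum(weights) == 0.0:
        # Every likelihood underflowed to zero; fall back to uniform weights.
        weights = [1.0] * len(moved)
    # 3. Resample with replacement in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # unknown start
    true_x = 2.0
    for _ in range(5):
        true_x += 1.0  # robot moves 1 m per step
        particles = pf_step(particles, 1.0, WALL_X - true_x, rng)
    estimate = sum(particles) / len(particles)
    print(f"true x = {true_x:.2f}, estimate = {estimate:.2f}")
```

Starting from particles spread uniformly over the corridor, the range measurements quickly concentrate the particles around the true position; the same cycle generalizes to 2D poses and laser scans by changing the motion and measurement models.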

Address

University of Twente

Langezijds (building no. 19), room 2305

Hallenweg 8

7522 NH Enschede

Netherlands

University of Twente

Langezijds 2305

P.O. Box 217

7500 AE Enschede

Netherlands
